HOW TO BUILD A SONGWRITING AI

Author: Wayne Cheng

Introduction

The music industry is embracing AI. Tools such as Google Magenta and Amazon DeepComposer use deep learning to create music. This article explains the fundamental principles behind building a songwriting AI and demystifies the technology that powers these applications.

Deep Learning: The Foundation of AI Music

Deep learning, the dominant approach in modern AI, uses artificial neural networks to learn a mapping between two data domains. In essence, a network is a chain of mathematical functions that translates data from one form (X) to another (Y).

Along the way, the network performs "automatic feature extraction": it learns which elements of X correspond to Y. For example, an image recognition AI learns that a picture with fur, ears, and a tail translates to the word "dog."

Deep learning involves two phases: training and prediction (also known as inference). During training, the AI is fed a dataset of X and Y, learning the mapping between them. During prediction, the AI receives new X data and predicts the corresponding Y data.
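The two phases can be illustrated with a deliberately tiny model. In the sketch below, a two-parameter linear function stands in for a neural network. The dataset, the learning rate, and the function names (`train`, `predict`) are assumptions made for illustration and do not come from any particular library.

```python
def train(xs, ys, lr=0.05, epochs=2000):
    """Training phase: fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Error of the current model on every (X, Y) pair in the dataset.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(model, x):
    """Prediction (inference) phase: apply the learned mapping to new X data."""
    w, b = model
    return w * x + b


# Toy dataset (assumed for illustration): Y = 2 * X + 1.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.0, 3.0, 5.0, 7.0]

model = train(train_x, train_y)
prediction = predict(model, 4.0)  # approximately 9.0, an X value not seen in training
```

A real songwriting model has far more parameters and a nonlinear structure, but the split is the same: parameters are adjusted during training and then held fixed during prediction.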

Autoregressive Models: Unlocking Musical Sequences

Music's inherently sequential nature makes autoregressive deep learning models well suited to AI music applications. These models predict the next note from the preceding sequence of notes.

Within an autoregressive model, a sequence of notes is represented as an internal state. Each note updates the state, evolving the model's understanding of the musical sequence.

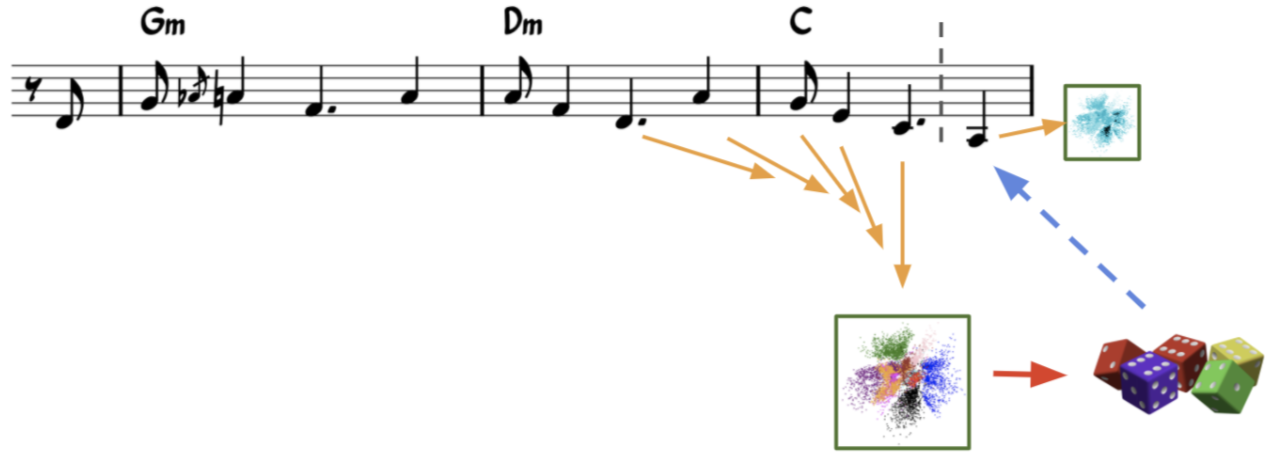

During prediction, the AI converts the internal state into a probability distribution, essentially a set of weighted dice. Rolling these dice determines the next note in the sequence, which is then fed back into the model to update the state. Repeating this process allows the AI to generate a continuous musical progression.
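The generation loop described above can be sketched as follows. This is a simplified stand-in, not a neural network: the "state" is reduced to the most recent note, and the probability distribution comes from transition counts in a toy dataset. The melodies and the function names (`train_bigram`, `next_note_distribution`, `generate`) are assumptions for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(melodies):
    """Count how often each note follows each other note in the training melodies."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts


def next_note_distribution(counts, state):
    """Convert the state (here, just the last note) into a probability distribution."""
    options = counts.get(state)
    if not options:
        return {}
    total = sum(options.values())
    return {note: count / total for note, count in options.items()}


def generate(counts, start, length, rng):
    """Sample one note at a time and feed each note back in as the new state."""
    sequence = [start]
    state = start
    while len(sequence) < length:
        dist = next_note_distribution(counts, state)
        if not dist:
            break  # no known continuation from this state
        notes = list(dist)
        weights = [dist[note] for note in notes]
        note = rng.choices(notes, weights=weights, k=1)[0]  # roll the weighted dice
        sequence.append(note)
        state = note  # the sampled note updates the state
    return sequence


# Toy training data (assumed): note names only, no rhythm.
training_melodies = [["C", "D", "E", "C"], ["C", "E", "G", "E", "C"]]
model = train_bigram(training_melodies)
melody = generate(model, start="C", length=8, rng=random.Random(0))
```

In an RNN or Transformer the state summarizes the entire preceding sequence rather than a single note, but the sample-and-feed-back loop has the same shape.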

Popular autoregressive AI architectures include:

RNN

RNN with attention mechanism

Transformer

Building an AI Music Composer: The Autoencoder Architecture

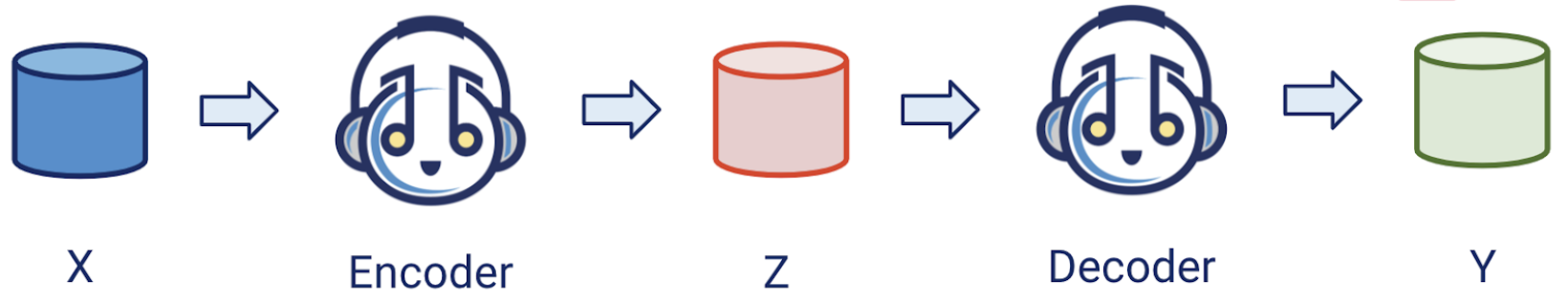

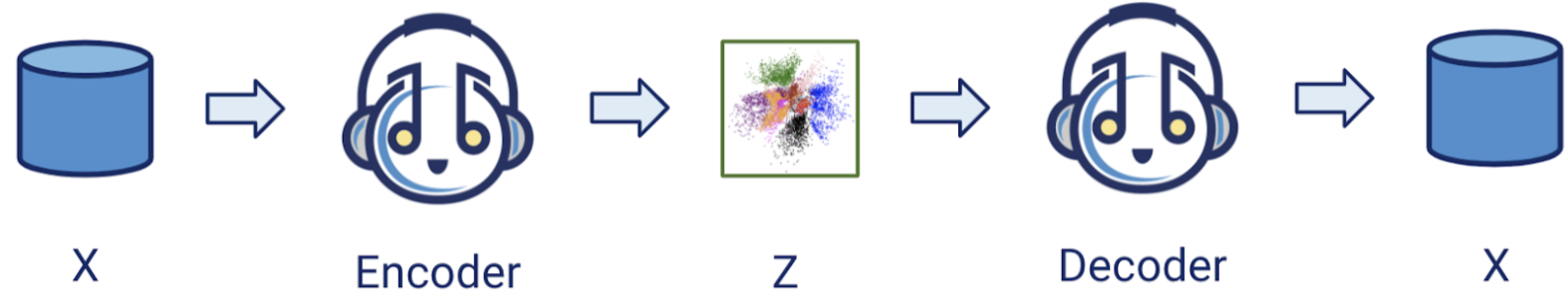

The autoencoder architecture is commonly used in AI music creation tools. It has two components: an encoder and a decoder.

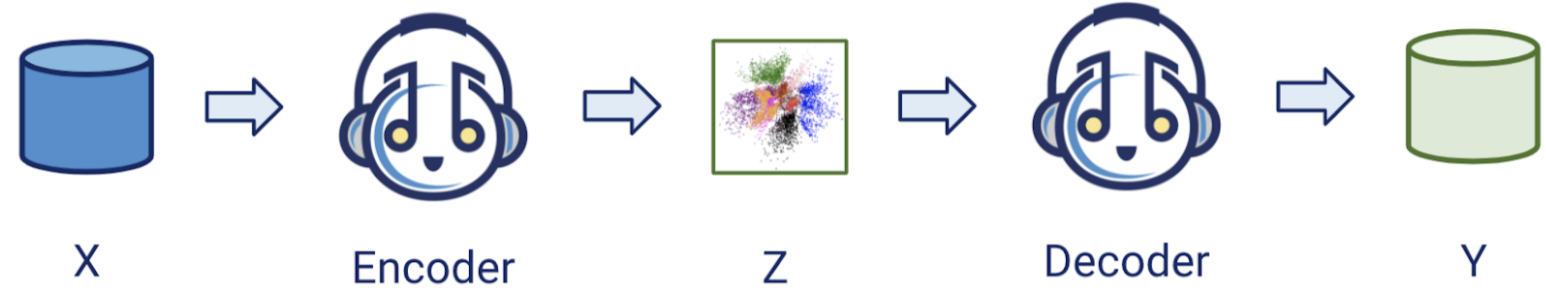

The encoder maps data from X to Z, where Z is a compact representation of the essential features of X. The decoder then maps Z to Y.

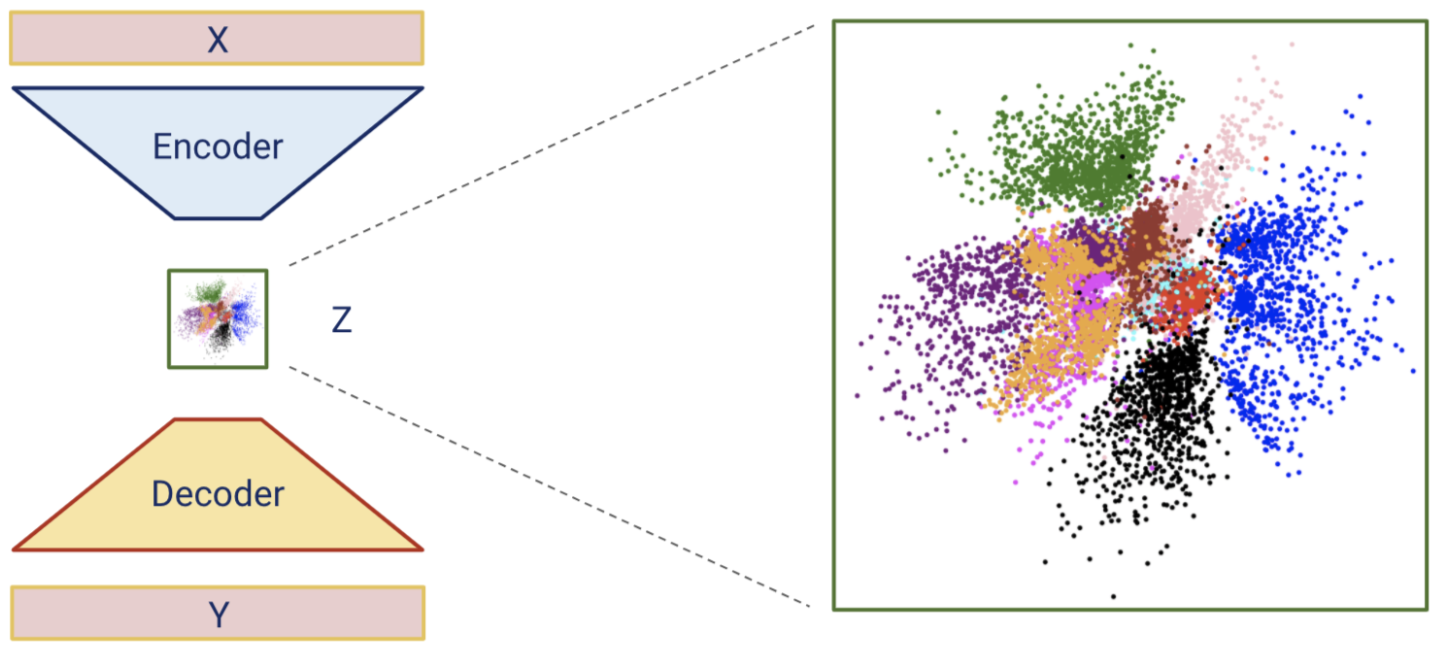

During training, the AI maps music with similar features to nearby locations in the Z space. For instance, it may cluster songs by the Beatles in one region and songs by Mariah Carey in another.

One training technique for autoencoders is reconstruction. The AI maps X to Z and then Z back to X, effectively reconstructing the original data.
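As a rough illustration of reconstruction training, the sketch below fits a minimal linear autoencoder with a two-dimensional X and a one-dimensional Z. It is a toy under stated assumptions: real music autoencoders are nonlinear and far larger, and the data, initial weights, learning rate, and function names here are all hypothetical.

```python
def encode(w, x):
    """Encoder: map a 2-D input X to a 1-D code Z."""
    return w[0] * x[0] + w[1] * x[1]


def decode(v, z):
    """Decoder: map the 1-D code Z back to a 2-D output."""
    return [v[0] * z, v[1] * z]


def reconstruction_loss(w, v, data):
    """Mean squared distance between each input and its reconstruction."""
    total = 0.0
    for x in data:
        x_hat = decode(v, encode(w, x))
        total += sum((h - xi) ** 2 for h, xi in zip(x_hat, x))
    return total / len(data)


def train_autoencoder(data, lr=0.01, epochs=500):
    """Gradient descent on the reconstruction loss (X -> Z -> X)."""
    w = [0.1, 0.1]  # encoder weights (assumed starting point)
    v = [0.1, 0.1]  # decoder weights (assumed starting point)
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_v = [0.0, 0.0]
        for x in data:
            z = encode(w, x)
            err = [v[i] * z - x[i] for i in range(2)]
            # Chain rule: the loss depends on w only through z.
            dz = 2 * (err[0] * v[0] + err[1] * v[1])
            for i in range(2):
                grad_v[i] += 2 * err[i] * z / n
                grad_w[i] += dz * x[i] / n
        w = [w[i] - lr * grad_w[i] for i in range(2)]
        v = [v[i] - lr * grad_v[i] for i in range(2)]
    return w, v


# Toy data (assumed): every point lies on one line, so a single number Z
# is enough to describe each point.
data = [[1.0, 2.0], [2.0, 4.0], [-1.0, -2.0], [0.5, 1.0]]
initial_loss = reconstruction_loss([0.1, 0.1], [0.1, 0.1], data)
w, v = train_autoencoder(data)
final_loss = reconstruction_loss(w, v, data)
```

Because the training signal is the input itself, no labels are needed: the model is rewarded only for squeezing X through Z and recovering it.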

For prediction, we can discard the encoder and utilize the decoder to generate music based on Z. Selecting a random point in the Z space results in new music. Choosing a point between the Beatles and Mariah Carey clusters produces a blend of both artists' styles.
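Choosing a point between two clusters can be done with simple linear interpolation, sketched below. The two-dimensional latent vectors are made-up values for illustration, and the helper names (`centroid`, `interpolate`) are assumptions; in practice the resulting point would be passed to the trained decoder, which is not shown here.

```python
def centroid(points):
    """Average a list of latent vectors to find the center of a cluster."""
    n = len(points)
    return [sum(coords) / n for coords in zip(*points)]


def interpolate(z_a, z_b, t):
    """Return the point a fraction t of the way from z_a to z_b (0 <= t <= 1)."""
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same dimension")
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


# Hypothetical latent codes for songs by each artist.
beatles_codes = [[0.0, 0.0], [2.0, 4.0]]
carey_codes = [[5.0, 6.0], [7.0, 10.0]]

# t = 0.5 gives an even blend; values nearer 0 or 1 lean toward one artist.
blend = interpolate(centroid(beatles_codes), centroid(carey_codes), 0.5)
# blend is [3.5, 5.0]; a trained decoder would turn this point into music.
```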

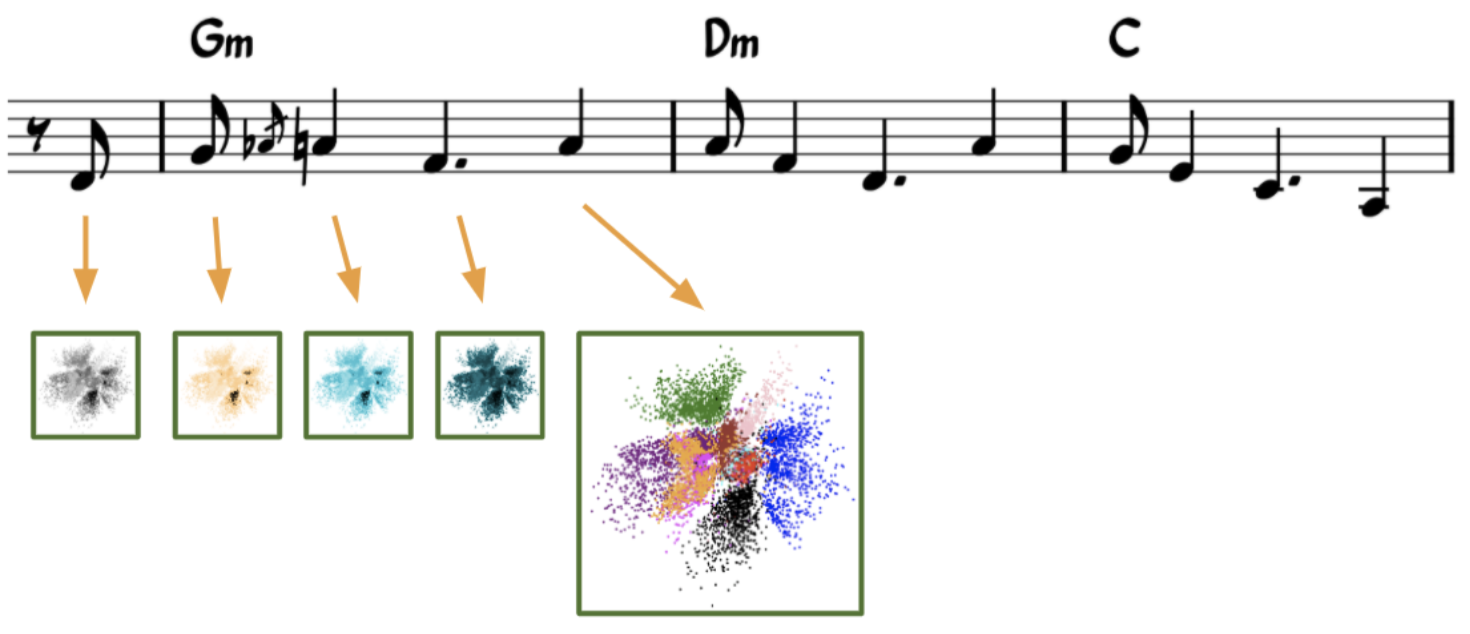

Another technique is transformation. In both training and prediction, X is mapped to Z, and then Z is mapped to Y. This allows control over how features are represented in Z, enabling contextual music generation.

With this approach, music can be created from various contexts, including text, chord progressions, or even melodic motifs.

Post-Processing AI-Generated Music

While powerful, AI-generated music requires post-processing to overcome inherent limitations.

Firstly, plagiarism is a concern, because a model can reproduce passages from its training data. A plagiarism checker can be implemented to identify and discard output containing note sequences copied directly from the training dataset.
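One simple way to build such a checker is to compare runs of consecutive notes (n-grams) against the training data, as in the sketch below. The representation of notes as MIDI pitch numbers, the window size of four notes, and the function names are assumptions for illustration; a production checker would likely also account for transposition and rhythm.

```python
def ngrams(notes, n):
    """Return the set of all runs of n consecutive notes in a melody."""
    return {tuple(notes[i:i + n]) for i in range(len(notes) - n + 1)}


def build_index(training_melodies, n):
    """Collect every n-note run that appears anywhere in the training dataset."""
    index = set()
    for melody in training_melodies:
        index |= ngrams(melody, n)
    return index


def is_plagiarized(candidate, index, n):
    """True if the candidate shares at least one n-note run with the training data."""
    return not ngrams(candidate, n).isdisjoint(index)


def filter_original(candidates, training_melodies, n=4):
    """Discard generated melodies that copy an n-note run from the training data."""
    index = build_index(training_melodies, n)
    return [c for c in candidates if not is_plagiarized(c, index, n)]


# Toy example (assumed data): notes as MIDI pitch numbers.
training_melodies = [[60, 62, 64, 65, 67]]
candidates = [
    [72, 60, 62, 64, 65],  # contains the copied run 60, 62, 64, 65
    [60, 64, 67, 72, 76],  # shares no 4-note run with the training data
]
kept = filter_original(candidates, training_melodies)
# kept is [[60, 64, 67, 72, 76]]
```

The window size is a trade-off: a small `n` rejects common musical figures, while a large `n` lets longer borrowed passages through.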

Secondly, AI-generated music exhibits a wide range of quality. To address this, a music critic can be used to rank the generated output based on its aesthetic appeal.
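The ranking step can be sketched as a sort over critic scores. The scoring function below is only a placeholder: it prefers melodies with small steps between notes, which is an assumption chosen to keep the example short and is not a real measure of aesthetic appeal. Any function that maps a melody to a number, including a trained model, could be passed in its place.

```python
def smoothness_critic(notes):
    """Placeholder critic: higher scores for smaller average leaps between notes."""
    if len(notes) < 2:
        return 0.0
    leaps = [abs(b - a) for a, b in zip(notes, notes[1:])]
    return -sum(leaps) / len(leaps)


def rank_by_critic(candidates, critic):
    """Sort generated melodies from highest to lowest critic score."""
    return sorted(candidates, key=critic, reverse=True)


# Toy candidates (assumed data): notes as MIDI pitch numbers.
candidates = [
    [60, 72, 48, 84],  # large leaps, low score under this placeholder
    [60, 62, 64, 65],  # stepwise motion, high score under this placeholder
]
ranked = rank_by_critic(candidates, smoothness_critic)
best = ranked[0]  # [60, 62, 64, 65]
```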

Conclusion

Songwriting AI uses deep learning technologies such as autoregressive models and autoencoder architectures to create music. Post-processing with plagiarism checkers and music critics helps safeguard the originality and quality of the generated output.

About the Author

Wayne Cheng is the founder and AI app developer at Audoir, LLC. Prior to founding Audoir, he worked as a hardware design engineer for Silicon Valley startups and an audio engineer for creative organizations. He holds an MSEE from UC Davis and a Music Technology degree from Foothill College.

Further Exploration

To experience the sound of AI-generated music, visit our website to explore examples of AI-written songs and free AI songwriting tools.

Copyright © Audoir, LLC 2025.